Prompting with Co-Star:

Bringing structure to AI interactions

Last updated: 12.11.2025 13:00

Large language models provide impressive answers – but not always the right ones. The Co-Star framework shows how companies can get more precision and consistency out of AI with smart prompting.

Anyone who works with large language models (LLMs) knows the challenge: they respond quickly and sound convincing, but are not always correct or reliable. That is not enough for productive use in companies, where the AI must deliver exactly what is required, time after time. The key lies in prompting, i.e. the way in which tasks are formulated for a model. The Co-Star framework is a tried-and-tested way to bring structure and consistency to prompts.

Why prompting is crucial

LLMs are trained to give an answer in almost any context. Although this appears flexible and helpful at first glance, it leads to typical problems:

For AI to be reliable in everyday work, it needs prompts that delimit the context and give clear instructions. Numerous prompting guidelines exist for this purpose; they make the LLM narrow down the huge space of possible contexts and deal with one specific task only. One of these prompting frameworks, which we also use internally, is "Co-Star". It won a prompt engineering competition in Singapore in 2023.

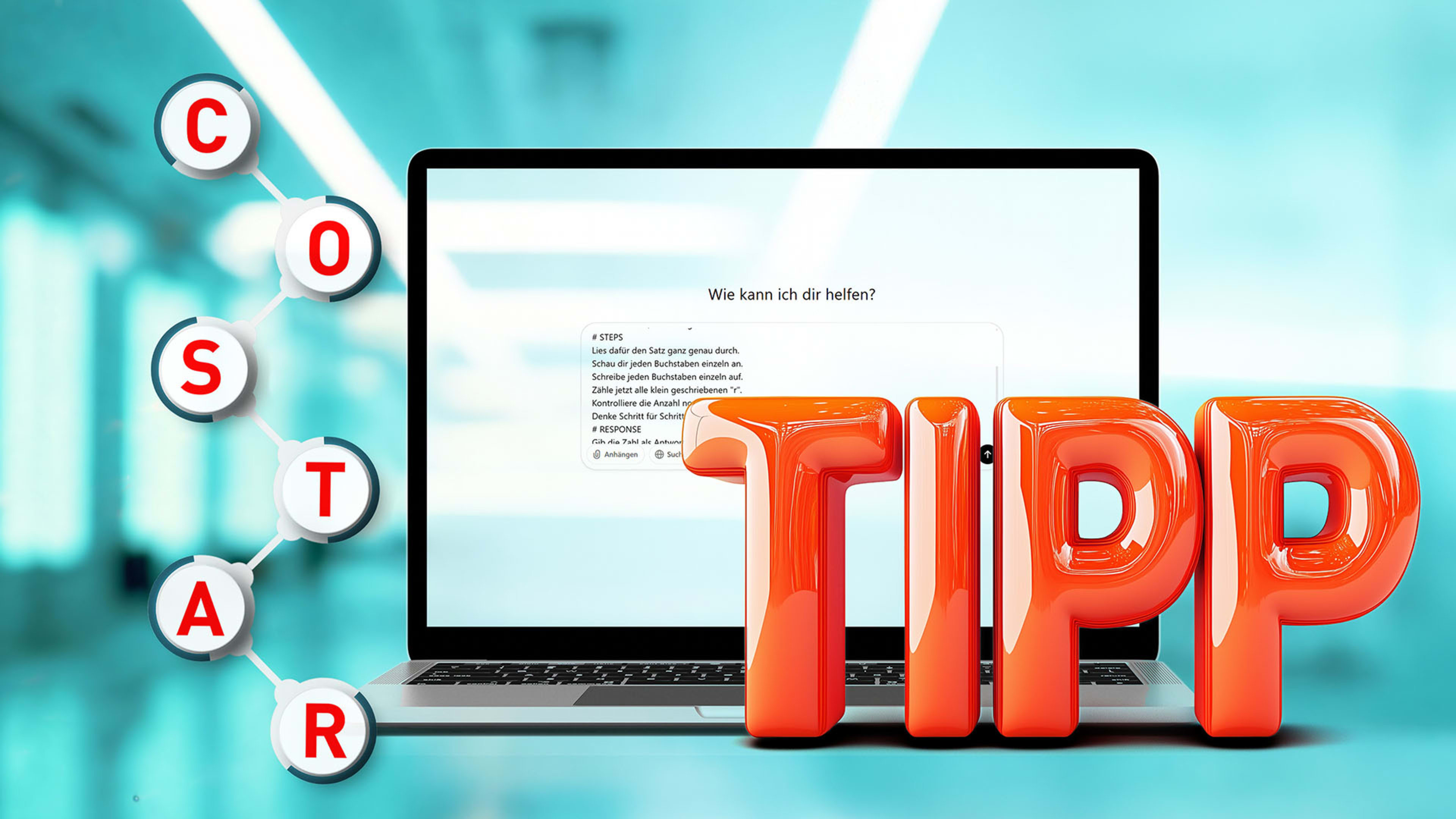

The Co-Star framework: Structure and principles

The Co-Star framework provides a structured template for prompting. It consists of six building blocks:

Context

Objective

Style

Tone

Audience (target group)

Response format

Co-Star was originally geared towards creative tasks such as writing blog posts. With minor adjustments, however, it is also suitable for applications that demand precision, such as customer service. We have therefore developed an adapted Co-Star framework for our internal prompting.

Adapted Co-Star prompting for customer service

Because customer service demands precision, we place more emphasis on the steps to be performed and have merged tone and style into a single block. Our modified Co-Star framework looks like this:

Context: What role should the model play? In which scenario does it operate?

Objective: Clear and brief definition of the objective: What exactly is to be achieved?

Steps: What steps are necessary to achieve the objective? Break down the individual steps, insert rules and examples.

Tone: What tone of voice is appropriate (e.g. factual, friendly, formal)?

Audience: Who is the response intended for?

Response format: What format should the output be in (e.g. JSON, Markdown, continuous text)?
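To make the six building blocks more concrete, here is a minimal Python sketch of how they could be assembled into one prompt string. The class name `CoStarPrompt`, the upper-case section labels and the sample texts are illustrative assumptions, not part of the framework or of our internal tooling.

```python
from dataclasses import dataclass


@dataclass
class CoStarPrompt:
    """Hypothetical container for the six adapted Co-Star building blocks."""

    context: str
    objective: str
    steps: list[str]
    tone: str
    audience: str
    response_format: str

    def render(self) -> str:
        # Number the steps so the model can work through them in order.
        numbered = "\n".join(
            f"{i}. {step}" for i, step in enumerate(self.steps, start=1)
        )
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STEPS", numbered),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE FORMAT", self.response_format),
        ]
        # The label style and blank-line separators are an assumption.
        return "\n\n".join(f"{name}:\n{body}" for name, body in sections)


# Illustrative sample values, invented for this sketch.
prompt = CoStarPrompt(
    context="You support a customer service team and receive call transcripts.",
    objective="Summarize the call in at most three sentences.",
    steps=[
        "Identify the customer's concern.",
        "Note what the agent promised or resolved.",
        "List any open follow-up tasks.",
    ],
    tone="Factual and neutral.",
    audience="Service agents who take over the case.",
    response_format="Continuous text, no bullet points.",
)
print(prompt.render())
```

Keeping each block in its own field makes it easier to change one part, for example the steps, without touching the rest of the prompt.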

Comparison: Simple prompt vs. adapted Co-Star

An illustrative example is the task of counting the lowercase letter "r" in a sentence, with the same request repeated 10 times using GPT-4o mini.
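A comparison of this kind can be scored with a few lines of Python. The sketch below computes the true count and tallies the answers from repeated runs. The function `ask_model` is a hypothetical placeholder for whatever model call is used; here it is replaced by a stub with canned answers, so the numbers say nothing about a real model. The sample sentence is also invented, and the sketch assumes the model answers with a bare number.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def count_lowercase_r(sentence: str) -> int:
    """Ground truth: how often the lowercase letter 'r' occurs."""
    return sentence.count("r")


def consistency_report(
    ask_model: Callable[[str], str], prompt: str, expected: int, runs: int = 10
) -> dict:
    """Send the same prompt `runs` times and tally the answers."""
    answers = [ask_model(prompt).strip() for _ in range(runs)]
    tally = Counter(answers)
    return {
        "runs": runs,
        "correct": tally.get(str(expected), 0),
        "distinct_answers": len(tally),
        "answers": dict(tally),
    }


# Stub instead of a real model call (assumption for illustration only).
_canned = cycle(["7", "7", "6"])


def fake_model(prompt: str) -> str:
    return next(_canned)


sentence = "Rare red roses grow near the river."
expected = count_lowercase_r(sentence)
report = consistency_report(
    fake_model, f'Count the lowercase "r" in: {sentence}', expected
)
print(expected, report)
```

Running the same harness once with a simple prompt and once with an adapted Co-Star prompt yields two reports that can be compared directly.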

Co-Star in practice: three fields of application

The real strength of the framework becomes apparent in the corporate context. Here are three examples with increasing complexity.

Prompting remains iterative

But even with Co-Star, the first prompt is rarely the best. Prompting is an iterative process that requires systematic testing and optimization. The following best practices have proven themselves in practice:
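One possible way to make this testing systematic is to keep a small set of test cases and score every prompt variant against it. The following Python sketch illustrates the idea under stated assumptions: `pass_rate`, `rank_variants`, the `{text}` placeholder and the stub `fake_model` are all hypothetical, and the stub's behavior is hard-coded, so the result is not evidence about real models.

```python
from typing import Callable


def pass_rate(
    ask_model: Callable[[str], str], template: str, cases: list[tuple[str, str]]
) -> float:
    """Share of test cases for which the model's answer matches exactly."""
    passed = 0
    for text, expected in cases:
        answer = ask_model(template.format(text=text))
        if answer.strip() == expected:
            passed += 1
    return passed / len(cases)


def rank_variants(
    ask_model: Callable[[str], str],
    variants: dict[str, str],
    cases: list[tuple[str, str]],
) -> list[tuple[str, float]]:
    """Score every prompt variant and sort from best to worst."""
    scores = {name: pass_rate(ask_model, tpl, cases) for name, tpl in variants.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Stub model (assumption): counts correctly only when the prompt contains a
# STEPS block, otherwise it always answers "2".
def fake_model(prompt: str) -> str:
    word = prompt.rsplit("Input: ", 1)[1]
    return str(word.count("r")) if "STEPS" in prompt else "2"


variants = {
    "simple": "How many lowercase r are in this word?\nInput: {text}",
    "structured": (
        "OBJECTIVE: Count the lowercase r.\n"
        "STEPS: Go through the word letter by letter.\n"
        "Input: {text}"
    ),
}
cases = [("mirror", "3"), ("radar", "2"), ("tree", "1")]
ranking = rank_variants(fake_model, variants, cases)
print(ranking)
```

Re-running the same test cases after each change to a prompt shows whether a new variant actually improves on the previous one.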

The Co-Star framework shows how clear structure and step-by-step instructions can turn LLMs from unreliable "chatterboxes" into dependable team players. Whether for call summaries, pre-qualification of calls or knowledge-based chatbots – Co-Star helps ensure that AI can be used precisely, consistently and productively.

Prompting is no longer a matter of chance or gut feeling, but a tool that is controllable and verifiable – and thus enables the productive use of AI in the company.

Author:

Dr. Anja Linnenbürger

Head of Research

VIER

Further information

You can get even more exciting insights on this topic in our webinar recording "Prompt your Workforce: How AI becomes a Team Player".